Orientation and First Weeks

The first week was dedicated to onboarding and to understanding what I would be working with. I was tasked with writing a document describing the project I would be working on and the data set it would pull from. To write such a document, I had to learn a lot, such as:

What the data set actually contains

What the project is even supposed to do

How the project uses the data set

By being tasked with explaining the points above, I gained a deep understanding of the project that I would be working on.

The second week was spent largely experimenting in a Linux environment. Before starting this co-op, I had very little experience working with Linux. At the start of the week, I was given a demonstration of what could be done in a Linux terminal and some of the commands used, and I was asked to give a similar demonstration the following week. I had a lot of fun trying out a range of commands, and the self-study nature of the task helped me become familiar with the Linux environment.

Learning and Adaptation

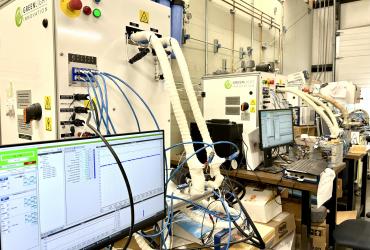

There was definitely a lot to learn during my co-op, as I had little background in the field of research I was participating in. The self-study approach to onboarding really helped me develop a deep understanding of what I would later be working on. As the ΔE+ Research Group is a team of researchers, I worked with multiple people as a research assistant. A few weeks into my Spring 2023 work term, I began working with a different researcher on a different project. This meant another round of onboarding, as I had to understand what the new project would entail.

Adaptation is something to get used to, especially in research work. There can be a lot of experimentation, which in turn can bring up a lot of roadblocks. When the results are unsatisfactory, the methods used to produce them are changed, which can create new concerns. For example, I may try to bring in a new data set that contains a more accurate record of wind speeds at a location, only to find that it stores its data in a completely different format. I then have to find a way to read and work with this new format.

Accomplishments and Challenges

There was one particular task I worked on that was both a highlight and a huge challenge for me. I had been using a Python module called atlite to work with a data set called ERA5, which includes hourly wind speeds at a given latitude and longitude. Suppose that using these wind speeds directly in calculations produces poor results, and I want to try scaling the wind speeds before running the calculations. It did not sound particularly difficult at first, but there turned out to be several underlying issues.

The main issue stems from the fact that the calculations have to be done using atlite cutouts; I cannot simply use a normal Python list. As such, I have to follow the exact data storage format that the cutouts use, which is an xarray Dataset object. The problem is that I found it incredibly difficult to modify the values stored inside a Dataset, which are held in xarray DataArray objects. Trying to set values directly would either raise an error or silently do nothing.

There was a lot of trial and error, but I eventually managed to modify the values in the dataset by copying the wind DataArray object and modifying the values in that copy. I learned that it is possible to modify values when working with a standalone DataArray object; it was only a problem when the DataArray was nested inside the Dataset. Finally, I could overwrite the old wind DataArray with the modified one by assigning over it. I now had an atlite cutout containing scaled wind speeds that would later be used for calculations.
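The copy, modify, and reassign approach described above can be sketched roughly as follows. This is only an illustration under stated assumptions, not the exact code from the project: it builds a small synthetic xarray Dataset in place of a real atlite cutout, and the variable name `wnd100m`, the grid coordinates, and the scale factor `SCALE` are all placeholders chosen for the example.

```python
import numpy as np
import xarray as xr

# Synthetic stand-in for the cutout's data (assumption: a real cutout
# would hold an xarray Dataset with a wind speed variable like this one).
wind = xr.DataArray(
    np.full((4, 2, 3), 5.0),                 # 4 hours on a 2 x 3 grid, 5 m/s
    dims=("time", "y", "x"),
    coords={
        "time": np.arange(4),
        "y": [50.0, 50.25],
        "x": [-120.0, -119.75, -119.5],
    },
    name="wnd100m",                          # placeholder variable name
)
ds = xr.Dataset({"wnd100m": wind})

SCALE = 1.2  # hypothetical scaling factor, for illustration only

# 1. Copy the wind DataArray out of the Dataset (copy() is deep by default).
scaled = ds["wnd100m"].copy()

# 2. Modify the values on the standalone copy.
scaled.values = scaled.values * SCALE

# 3. Overwrite the old variable by assigning the copy back into the Dataset.
ds["wnd100m"] = scaled
```

After the last step, the Dataset keeps its original dimensions and coordinates, and only the wind speed values have changed.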

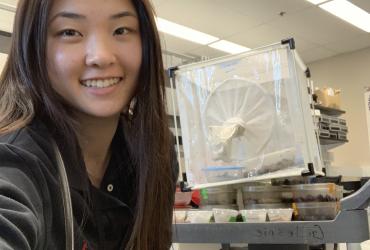

Here is a cool visualization of an incredibly small snippet of the ERA5 data set that I have been working with.